Important

Interface change

We will rename the volume types as follows: Ceph to standard, CephBulk to bulk, CephIntensive to fast, and CephSSD to legacy.
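Scripts that hard-code volume type names will need to follow this rename. Below is a minimal Python sketch that keeps the mapping in a single lookup table. The names `VOLUME_TYPE_RENAMES` and `current_type_name` are hypothetical and are not part of any OpenStack API.

```python
# Old -> new volume type names, as listed in the interface change notice.
# VOLUME_TYPE_RENAMES and current_type_name are hypothetical helper names.
VOLUME_TYPE_RENAMES = {
    "Ceph": "standard",
    "CephBulk": "bulk",
    "CephIntensive": "fast",
    "CephSSD": "legacy",
}


def current_type_name(type_name: str) -> str:
    """Return the new name for a legacy volume type; other names pass through unchanged."""
    return VOLUME_TYPE_RENAMES.get(type_name, type_name)


if __name__ == "__main__":
    for old in ("Ceph", "CephSSD", "standard"):
        print(f"{old} -> {current_type_name(old)}")
```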

Storage

The HPC Cloud offers both block storage, in the form of disk volumes which can be directly attached to a server, and file storage, in the form of shared filesystems which can be NFS-mounted by the operating system. In addition, there is an object store providing containers (also called buckets) which allow data to be accessed from multiple clients through standard REST APIs.[1] The block and object storage services are based on Ceph, while file storage offers a choice between CephFS and IBM Storage Scale (GPFS). The latter is also mounted on Raven (by default) and on Robin (on request) for efficient exchange of data between the various systems.

Block

Storage volumes are logically independent block devices of arbitrary size. They can be attached to a running server and later detached, or even reattached to a different server.[2] Typically, volumes are used to store data which should persist beyond the lifetime of a single instance, although they are also useful as “scratch” space.

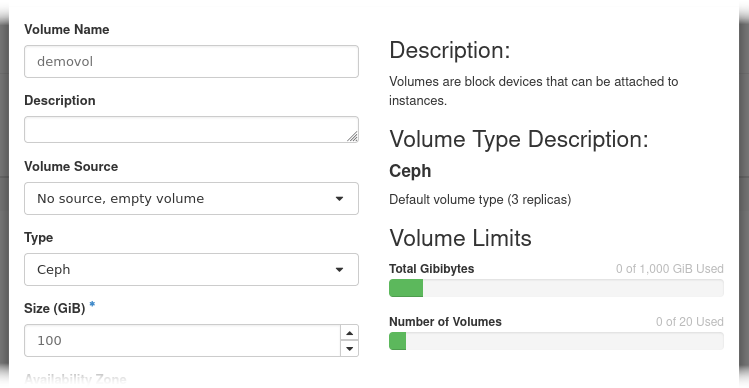

Create an empty volume via the “Create Volume” button on Project / Volumes / Volumes.

The maximum allowable size depends on your project’s quota.

The default volume type Ceph stores data on an HDD-based pool.

There is also the type CephSSD, available on request, which targets an SSD-based pool and may offer higher performance and lower latency.


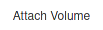

Select a server from Project / Compute / Instances, perform the “Attach Volume” action, and pick the newly created volume.

The device names of volumes (e.g. /dev/vdb) are not guaranteed to be the same each time they are attached to a server. To identify a volume definitively, look in /dev/disk/by-id. Volumes appear there as entries virtio-ID, where ID is the first 20 characters of the OpenStack volume ID. For example:

openstack volume list --name VOLUME
+--------------------------------------+--------+-----------+------+-------------+
| ID                                   | Name   | Status    | Size | Attached to |
+--------------------------------------+--------+-----------+------+-------------+
| 708e61cf-b80a-4488-9a32-eb532715ce38 | VOLUME | available |   10 |             |
+--------------------------------------+--------+-----------+------+-------------+

This volume will appear as /dev/disk/by-id/virtio-708e61cf-b80a-4488-9 on the server. Once the volume is attached, the “Attached to” column of this listing also shows its current device name (e.g. /dev/vdb).
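The naming rule for /dev/disk/by-id can be expressed as a small helper. This is a minimal Python sketch that assumes the virtio-ID naming described here holds on your server; `by_id_path` and `resolve_device` are hypothetical names.

```python
import os

BY_ID_DIR = "/dev/disk/by-id"


def by_id_path(volume_id: str) -> str:
    """Return the expected /dev/disk/by-id path for an OpenStack volume ID.

    Per the naming described in the text, the entry is "virtio-" followed by
    the first 20 characters of the volume ID.
    """
    return os.path.join(BY_ID_DIR, "virtio-" + volume_id[:20])


def resolve_device(volume_id: str):
    """Return the kernel device (e.g. /dev/vdb) for the volume, or None if it is not present."""
    path = by_id_path(volume_id)
    if not os.path.exists(path):
        return None
    # The by-id entry is a symlink pointing at the actual device node.
    return os.path.realpath(path)


if __name__ == "__main__":
    vid = "708e61cf-b80a-4488-9a32-eb532715ce38"
    print(by_id_path(vid))  # /dev/disk/by-id/virtio-708e61cf-b80a-4488-9
    print(resolve_device(vid))
```

On a machine where the volume is not attached, `resolve_device` simply returns None instead of guessing a device name.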

Important

We strongly advise using the disk ID to reliably match a block device on the server to its OpenStack volume.

Create and mount a new filesystem using the following example commands:

mkfs.xfs /dev/disk/by-id/virtio-708e61cf-b80a-4488-9

mkdir /demovol

echo "/dev/disk/by-id/virtio-708e61cf-b80a-4488-9 /demovol xfs defaults 0 0" >> /etc/fstab

mount /demovol
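Appending with `>>` adds a duplicate line every time the command is rerun. Below is a minimal Python sketch of an idempotent alternative, assuming it runs as root when pointed at the real /etc/fstab. `fstab_entry` and `ensure_fstab_entry` are hypothetical helper names, and only the first field (the device) is compared when checking for an existing entry.

```python
import os
import tempfile
from pathlib import Path


def fstab_entry(device: str, mountpoint: str, fstype: str = "xfs",
                options: str = "defaults") -> str:
    """Format one /etc/fstab line, with the dump and pass fields set to 0 as in the example."""
    return f"{device} {mountpoint} {fstype} {options} 0 0"


def ensure_fstab_entry(entry: str, fstab: str = "/etc/fstab") -> bool:
    """Append `entry` unless a line for the same device already exists.

    Returns True if the file was modified, False if it was left unchanged.
    """
    path = Path(fstab)
    device = entry.split()[0]
    text = path.read_text() if path.exists() else ""
    for line in text.splitlines():
        fields = line.split()
        # Compare the device field; comment lines never match since they start with "#".
        if fields and fields[0] == device:
            return False
    # Make sure the new entry starts on its own line.
    prefix = "" if text == "" or text.endswith("\n") else "\n"
    with path.open("a") as fh:
        fh.write(prefix + entry + "\n")
    return True


if __name__ == "__main__":
    # Demonstrate on a throwaway file rather than the real /etc/fstab.
    with tempfile.TemporaryDirectory() as tmp:
        demo = os.path.join(tmp, "fstab")
        line = fstab_entry("/dev/disk/by-id/virtio-708e61cf-b80a-4488-9", "/demovol")
        print(ensure_fstab_entry(line, demo))  # True: entry appended
        print(ensure_fstab_entry(line, demo))  # False: already present
```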

CLI Example

openstack volume create VOLUME --size SIZE [--type TYPE]

openstack server add volume SERVER VOLUME
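When scripting these two CLI steps, building the argument lists explicitly keeps the optional `--type` flag easy to handle. This sketch assumes the `openstack` client is installed and your credentials are already loaded in the environment (e.g. from a sourced openrc file). The helper names and the `demovol`/`demoserver` values are hypothetical.

```python
import subprocess
from typing import List, Optional


def volume_create_cmd(name: str, size_gb: int,
                      volume_type: Optional[str] = None) -> List[str]:
    """Build the `openstack volume create` argv; --type is only added when given."""
    cmd = ["openstack", "volume", "create", name, "--size", str(size_gb)]
    if volume_type is not None:
        cmd += ["--type", volume_type]
    return cmd


def attach_cmd(server: str, volume: str) -> List[str]:
    """Build the `openstack server add volume` argv."""
    return ["openstack", "server", "add", "volume", server, volume]


def run(cmd: List[str]) -> None:
    """Execute a command; raises subprocess.CalledProcessError if it fails."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical example: a 10 GB volume "demovol" for server "demoserver".
    # Only prints the commands; pass each list to run() to execute it.
    for cmd in (volume_create_cmd("demovol", 10), attach_cmd("demoserver", "demovol")):
        print(" ".join(cmd))
```

Passing a list to `subprocess.run` avoids shell quoting issues with unusual volume or server names.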

Tip

It is possible to migrate an existing volume to a new type online, even while it is still attached to a server. Simply click the “Change Volume Type” action, choose the target type, and set the migration policy to “On Demand”. The data will then be transparently copied to the new backend storage pool.

openstack volume set VOLUME --type TYPE --retype-policy on-demand

Object

The HPC Cloud includes an object store for saving and retrieving data through a publicly accessible REST API. Both OpenStack Swift- and S3-style APIs are supported. Objects are stored in containers (or buckets), which in turn belong to a project (or tenant). Folders within buckets are supported, but are typically handled as part of the object name.

Important

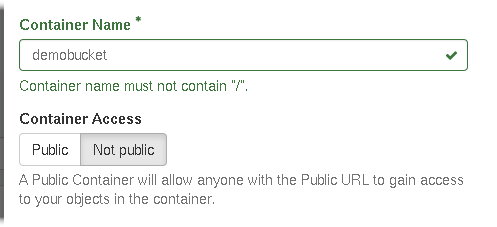

Similar to Amazon’s Simple Storage Service (S3), we use a global namespace for our buckets, so please choose a unique name for your bucket. If another bucket with the same name already exists, you will see the error message: Error: Forbidden insufficient permissions on requests operation.

Important

Please be aware that the contents of public buckets are not only visible but can be modified by anyone on the internet.

Create a new bucket via the “+ Container” button on Project / Object Store / Containers.

Note that buckets, both public and private, share a global namespace. If a bucket name is already taken by another project, you will receive an error message. Please follow the S3 bucket naming rules; this helps avoid problems when accessing objects via the S3 API.
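Before creating a bucket, you may want to check the name locally. The following Python sketch covers only a subset of the S3 naming rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; a letter or digit at both ends; no adjacent dots; not formatted as an IPv4 address). It is not an exhaustive validator, and `is_valid_s3_bucket_name` is a hypothetical name. Passing the check does not guarantee that the name is still free in the global namespace.

```python
import ipaddress
import re

# Lowercase letters, digits, dots and hyphens; first and last character must be a letter or digit.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")


def is_valid_s3_bucket_name(name: str) -> bool:
    """Check a subset of the S3 bucket naming rules (not an exhaustive validator)."""
    if not 3 <= len(name) <= 63:
        return False
    # fullmatch (rather than match with "$") also rejects a trailing newline.
    if not _BUCKET_RE.fullmatch(name):
        return False
    if ".." in name:
        return False
    try:
        ipaddress.IPv4Address(name)  # names such as 192.168.5.4 are not allowed
        return False
    except ValueError:
        return True


if __name__ == "__main__":
    for candidate in ("demobucket", "Demo_Bucket", "my..bucket", "192.168.1.1"):
        print(candidate, is_valid_s3_bucket_name(candidate))
```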


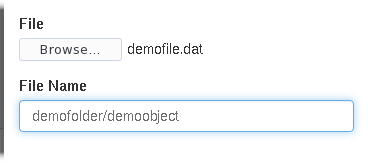

Select the bucket and click the upload button to upload a file. The object name (confusingly labeled “File Name”) defaults to the filename, but may be an arbitrary string. You may create a folder for the object at the same time by prepending one or more folder names, separated by “/”.


If you created a public bucket, the contents of the file would then be available at, e.g., https://objectstore.hpccloud.mpcdf.mpg.de/swift/v1/demobucket/demofolder/demoobject (Swift style) or https://objectstore.hpccloud.mpcdf.mpg.de/demobucket/demofolder/demoobject (S3 style).
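The two public URL forms differ only in the /swift/v1 prefix. A minimal Python sketch that builds both from a bucket and an object name, assuming the base URL from these examples; `object_key` and `public_urls` are hypothetical helpers. Percent-encoding uses `urllib.parse.quote`, which leaves “/” intact by default so folder separators survive.

```python
from urllib.parse import quote

# Base URL taken from the example URLs in the text.
OBJECT_STORE = "https://objectstore.hpccloud.mpcdf.mpg.de"


def object_key(*parts: str) -> str:
    """Join folder names and the object name with "/"; folders are just part of the key."""
    return "/".join(p.strip("/") for p in parts)


def public_urls(bucket: str, key: str) -> dict:
    """Return the Swift- and S3-style URLs of an object in a *public* bucket."""
    path = f"{quote(bucket)}/{quote(key)}"  # quote() keeps "/" unescaped by default
    return {
        "swift": f"{OBJECT_STORE}/swift/v1/{path}",
        "s3": f"{OBJECT_STORE}/{path}",
    }


if __name__ == "__main__":
    key = object_key("demofolder", "demoobject")
    for style, url in public_urls("demobucket", key).items():
        print(style, url)
```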

CLI Example

openstack container create BUCKET

openstack object create BUCKET FILE --name FOLDER/OBJECT

Object store quotas are separate from those of other storage types and are not displayed on the dashboard. For questions about your quota, please contact the helpdesk. Note that while the Ceph backend itself supports very large objects, uploads through the dashboard and the Swift API are limited to 5 GB.[3] Use the S3 API to avoid this limitation.
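Below is a minimal Boto3 sketch of an S3 upload that avoids the 5 GB limit through a multipart transfer. Assumptions: the S3 endpoint is https://objectstore.hpccloud.mpcdf.mpg.de with path-style addressing (inferred from the S3-style URL shown for public buckets), you already have S3 access and secret keys (see CLI and Scripting), and the limit is taken as 5 GiB. `needs_s3` and `upload_via_s3` are hypothetical names.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2
# Per the text, dashboard/Swift uploads are limited to 5 GB (assumed here to mean 5 GiB).
SWIFT_UPLOAD_LIMIT = 5 * GIB


def needs_s3(size_bytes: int) -> bool:
    """True if a file is too large to upload through the dashboard or the Swift API."""
    return size_bytes > SWIFT_UPLOAD_LIMIT


def upload_via_s3(path: str, bucket: str, key: str, access_key: str, secret_key: str,
                  endpoint: str = "https://objectstore.hpccloud.mpcdf.mpg.de") -> None:
    """Upload a (possibly > 5 GB) file using Boto3's managed multipart transfer."""
    # Imported lazily so that needs_s3() works without Boto3 installed.
    import boto3
    from boto3.s3.transfer import TransferConfig
    from botocore.config import Config

    s3 = boto3.client(
        "s3",
        endpoint_url=endpoint,  # assumed S3 endpoint
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        # Path-style URLs (endpoint/bucket/key), matching the S3-style example URL.
        config=Config(s3={"addressing_style": "path"}),
    )
    # Files above multipart_threshold are sent as parts of multipart_chunksize
    # and combined server-side into a single object.
    transfer = TransferConfig(multipart_threshold=64 * MIB, multipart_chunksize=64 * MIB)
    s3.upload_file(path, bucket, key, Config=transfer)
```

Unlike the segmented uploads of the swift command, the parts of an S3 multipart upload are combined into one regular object that other clients can download normally.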

Tip

The object store supports a subset of the Amazon S3 API. See CLI and Scripting to get started with s3cmd and/or Boto3.

[1] Note that the term container is unrelated to Docker containers. Where possible we use bucket to avoid confusion.

[2] Attaching a volume to multiple servers simultaneously is not supported.

[3] The openstack command also uses the Swift API and is therefore subject to the same limitation. Note that the --segment-size feature of the older swift command splits the file into a collection of smaller objects which cannot easily be downloaded by other clients.