Command Line Interface and Scripting

Attention

The dashboard and API are now located at https://hpccloud.mpcdf.mpg.de/. Existing config files pointing to https://rdocloud.mpcdf.mpg.de/ will continue to function.

The HPC Cloud includes a comprehensive REST API to enable command line usage and scripting. Internal MPCDF network access is not required to use the API; like the dashboard, it is accessible from MPG networks. If you can load the dashboard then you should be able to reach the API endpoints as well. Utilizing the API typically requires a specific set of credentials along with locally-installed software. [1]

Preparation

Log in to the dashboard and set the active project as described in Quick Start.

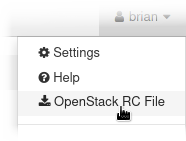

Use the dropdown menu at the upper right or follow this link to generate a project-specific OpenStack RC file and save it to your local machine. This file contains the necessary environment variables.

Most resources can be managed via the unified command line client. This Python-based software is available for many Linux distributions, most commonly as “python-openstackclient”. If it is not available on your machine, or you would prefer to use the latest stable version, simply install it with pip, e.g.:

pip install --user openstackclient

Depending on your local environment and preferences, you may want to add pip’s directory to the search path with export PATH=~/.local/bin:$PATH. To make the additional search path persistent, add the same command to ~/.profile or ~/.bash_profile.

Command line usage

To use the command line client you must first activate the RC file for the current terminal session (and enter your password):

source PROJECT-openrc.sh

At this point the openstack command is usable without any additional parameters. [2]

For example, the following prints a list of virtual machines in a human-readable format:

openstack server list
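In scripts it can be useful to confirm that the RC file has been sourced before invoking openstack. The following is a minimal sketch: the variable names it checks (OS_AUTH_URL, OS_USERNAME, OS_PASSWORD, OS_PROJECT_NAME) are assumed from typical OpenStack RC files, and the helper name is hypothetical, so compare them against the contents of your own PROJECT-openrc.sh.

```python
#!/usr/bin/env python3
import os

# Assumption: these are the variables a typical OpenStack RC file exports.
# Check your own PROJECT-openrc.sh for the exact set.
REQUIRED_VARS = ("OS_AUTH_URL", "OS_USERNAME", "OS_PASSWORD", "OS_PROJECT_NAME")


def missing_openstack_vars(env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


missing = missing_openstack_vars()
if missing:
    print("Not set: " + ", ".join(missing) + " (was the RC file sourced?)")
else:
    print("OpenStack credentials found in the environment")
```

Passing a plain dictionary instead of the real environment makes the check easy to try out without touching your shell session.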

Scripting

The most basic scripting option is to call openstack from a shell script, e.g. to automate tasks, generate reports, etc.

The -f/--format and -c/--column options are handy when feedback is required, as they make the output easier to parse than the default table format.

The following example uses the plaintext output to run a ping-check on all running servers.

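#!/bin/bash
# Ping every IPv4 address of each active server and report the result
for nets in $(openstack server list --status active -f value -c Networks) ; do
    # Extract the IPv4 addresses; the dots are escaped so they match literally
    for addr in $(echo "$nets" | grep -Po '\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}') ; do
        echo -n "Ping $addr ... "
        ping -w1 "$addr" > /dev/null && echo OK || echo FAIL
    done
done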
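A pattern of four digit groups also matches strings that are not valid addresses, such as 999.1.1.1. As a sketch of a stricter filter, the following Python helper validates each candidate with the standard ipaddress module. The name extract_ipv4 and the sample string are illustrative only and do not represent actual client output.

```python
#!/usr/bin/env python3
import ipaddress
import re

# Candidate pattern: four dot-separated groups of one to three digits
CANDIDATE = re.compile(r"\d{1,3}(?:\.\d{1,3}){3}")


def extract_ipv4(text):
    """Return the valid IPv4 addresses found in text, in order of appearance."""
    found = []
    for candidate in CANDIDATE.findall(text):
        try:
            ipaddress.IPv4Address(candidate)
        except ValueError:
            continue  # e.g. an octet above 255
        found.append(candidate)
    return found


# Illustrative input only; the real Networks column may be formatted differently
print(extract_ipv4("cloud-local-1=10.0.0.5, 999.1.1.1"))
```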

Also of interest are the json and yaml formats, which enable further processing of the output with jq and yq, respectively, or any other program that accepts these standards as input.
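As a sketch of the JSON route in Python, the helper below parses the text produced by openstack server list -f json using only the standard library. The sample document and the field names ("Name", "Status", "Networks") are assumptions based on common client versions. Some releases may print Networks as a plain string rather than a mapping, so inspect the real output first.

```python
#!/usr/bin/env python3
import json
import subprocess


def list_servers_json():
    """Run the client and return its JSON output as text (needs a sourced RC file)."""
    result = subprocess.run(
        ["openstack", "server", "list", "-f", "json"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout


def addresses_by_server(json_text):
    """Map each ACTIVE server name to a flat list of its addresses."""
    servers = {}
    for entry in json.loads(json_text):
        if entry.get("Status") != "ACTIVE":
            continue
        networks = entry.get("Networks") or {}
        # Assumption: Networks is a mapping of network name -> list of addresses
        if isinstance(networks, dict):
            servers[entry["Name"]] = [a for addrs in networks.values() for a in addrs]
    return servers


# Hypothetical sample standing in for the output of list_servers_json()
SAMPLE = """[
  {"Name": "web", "Status": "ACTIVE", "Networks": {"cloud-local-1": ["10.0.0.5"]}},
  {"Name": "old", "Status": "SHUTOFF", "Networks": {"cloud-local-1": ["10.0.0.9"]}}
]"""
print(addresses_by_server(SAMPLE))
```

In a real script, addresses_by_server(list_servers_json()) would replace the sample input.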

Language bindings

More complicated automation is perhaps better implemented using the bindings for your favorite programming language, such as the Python OpenStack SDK. Here is the Python version of the previous example:

#!/usr/bin/env python3
import openstack
import subprocess

# Credentials are read from the environment variables set by the RC file
conn = openstack.connect(cloud='envvars')

# Ping every address of each active server and report the result
for nets in [srv.addresses for srv in conn.compute.servers(status='active')]:
    for net in nets.values():
        for ip in net:
            print('Ping ' + ip['addr'] + ' ... ', end='')
            ping = subprocess.run('ping -w1 ' + ip['addr'], shell=True, stdout=subprocess.DEVNULL)
            print('OK' if ping.returncode == 0 else 'FAIL')

Naturally the command-line-based procedures listed in “CLI example” boxes are also achievable via language bindings. Here is another Python-based example, based on Quick Start.

#!/usr/bin/env python3
import openstack

# Credentials are read from the environment variables set by the RC file
conn = openstack.connect(cloud='envvars')

# Create a new keypair and save the generated private key locally
keypair = conn.compute.create_keypair(name='KEYNAME')
with open('KEYFILE', 'w') as f:
    f.write(keypair.private_key)

# Launch a server from an image, flavor, and network looked up by name
conn.compute.create_server(name='SERVERNAME',
                           image_id=conn.compute.find_image('Ubuntu 20.04').id,
                           flavor_id=conn.compute.find_flavor('mpcdf.small').id,
                           networks=[{"uuid": conn.network.find_network('cloud-local-1').id}],
                           key_name=keypair.name)
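One detail the example above leaves open is the file mode of KEYFILE: ssh typically refuses private keys that other users can read. A minimal sketch of writing the key with owner-only permissions follows. The helper name write_private_key is hypothetical, the demonstration uses a placeholder string in a temporary directory, and in the script above the key text would come from keypair.private_key.

```python
#!/usr/bin/env python3
import os
import stat
import tempfile


def write_private_key(path, private_key):
    """Create path with mode 0600 and write the key; fail if it already exists."""
    # O_EXCL avoids silently overwriting an existing key file
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "w") as f:
        f.write(private_key)


# Demonstration in a temporary directory with a placeholder key
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "KEYFILE")
    write_private_key(demo_path, "PLACEHOLDER KEY\n")
    demo_mode = stat.S_IMODE(os.stat(demo_path).st_mode)
    print(oct(demo_mode))
```

Calling write_private_key('KEYFILE', keypair.private_key) would then take the place of the open/write pair.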

Alternatives

Depending on your automation goals, it may also be worth considering the Ansible cloud modules for OpenStack or even the built-in orchestration service.

S3 API

The object storage service provides an S3-compatible API to support many common clients. Note that the RC file used above is not sufficient by itself to use the S3 API. Instead, you must generate an access/secret pair from your domain/user/project credentials with:

openstack ec2 credentials create

You may create more than one pair per project, for example to avoid sharing credentials between applications, but each will grant the same level of access to the project’s buckets. From here on, the endpoint objectstore.hpccloud.mpcdf.mpg.de plus the keys generated above are all that is needed for many S3 clients to use the object store. Unlike the dashboard and OpenStack APIs, the S3 (and Swift) endpoints are not limited to MPG networks.

For example, the s3cmd config file ~/.s3cfg should contain (at least):

[default]
host_base = objectstore.hpccloud.mpcdf.mpg.de
host_bucket = objectstore.hpccloud.mpcdf.mpg.de
access_key = ACCESS
secret_key = SECRET
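If the file is generated by a script, for example right after creating the key pair, Python's configparser can produce the same layout. This is only a sketch: the function name render_s3cfg is hypothetical, and ACCESS and SECRET are placeholders as above.

```python
#!/usr/bin/env python3
import configparser
import io

ENDPOINT = "objectstore.hpccloud.mpcdf.mpg.de"


def render_s3cfg(access_key, secret_key, endpoint=ENDPOINT):
    """Return the text of a minimal s3cmd configuration."""
    # interpolation=None keeps any '%' characters in the keys literal
    config = configparser.ConfigParser(interpolation=None)
    config["default"] = {
        "host_base": endpoint,
        "host_bucket": endpoint,
        "access_key": access_key,
        "secret_key": secret_key,
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


print(render_s3cfg("ACCESS", "SECRET"))
```

Since the result contains a secret, it is worth writing it to a file that only the owner can read.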

You can then create a bucket and upload a file:

s3cmd mb s3://BUCKET
s3cmd put FILE s3://BUCKET/FOLDER/OBJECT

Upload performance may benefit from a larger chunk size:

s3cmd --multipart-chunk-size-mb=1000 put ...
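To see why the chunk size matters, consider how many parts a multipart upload needs. The sketch below rests on several assumptions: that s3cmd's default chunk size is 15 MB, that it counts 1 MB as 1024*1024 bytes, and a hypothetical 100 GiB file. AWS S3 caps a multipart upload at 10,000 parts; whether this object store applies the same cap is something to verify rather than assume.

```python
#!/usr/bin/env python3

MIB = 1024 * 1024


def part_count(file_size_bytes, chunk_size_mb):
    """Number of parts needed to upload a file in chunks of the given size."""
    chunk_bytes = chunk_size_mb * MIB
    # Integer ceiling division avoids floating-point rounding
    return -(-file_size_bytes // chunk_bytes)


file_size = 100 * 1024 * MIB  # hypothetical 100 GiB file
for chunk_mb in (15, 1000):   # 15 is assumed to be the s3cmd default
    print(chunk_mb, "MB chunks ->", part_count(file_size, chunk_mb), "parts")
```

Fewer, larger parts mean fewer requests per upload, at the cost of more data to resend if a single part fails.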

There are also separate language bindings for the S3 API, such as Boto3. Here is a Python version of the above operations.

#!/usr/bin/env python3
import boto3

s3 = boto3.client(
    's3',
    endpoint_url='https://objectstore.hpccloud.mpcdf.mpg.de',
    aws_access_key_id='ACCESS',
    aws_secret_access_key='SECRET'
)

s3.create_bucket(Bucket='BUCKET')
s3.upload_file('FILE', 'BUCKET', 'FOLDER/OBJECT')
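Hard-coded keys are easy to leak when a script is shared. As a sketch, the keys can instead be read from the environment. AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are the variable names Boto3 itself recognizes, while the helper name s3_client_kwargs is hypothetical.

```python
#!/usr/bin/env python3
import os

ENDPOINT_URL = "https://objectstore.hpccloud.mpcdf.mpg.de"


def s3_client_kwargs(env=None):
    """Build keyword arguments for boto3.client('s3', ...) from the environment."""
    env = os.environ if env is None else env
    try:
        return {
            "endpoint_url": ENDPOINT_URL,
            "aws_access_key_id": env["AWS_ACCESS_KEY_ID"],
            "aws_secret_access_key": env["AWS_SECRET_ACCESS_KEY"],
        }
    except KeyError as err:
        # Report which variable is missing instead of a bare KeyError
        raise RuntimeError("missing environment variable " + err.args[0]) from None
```

The client would then be created with boto3.client('s3', **s3_client_kwargs()).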

Note

Not all features of AWS S3 are supported. See the Ceph documentation for details.

[1] Technically, it is also possible to access the APIs directly via curl, but this is primarily used for development and testing.

[2] If you prefer not to use environment variables, the credentials can also be passed explicitly, e.g.:

openstack \
    --os-auth-url https://hpccloud.mpcdf.mpg.de:13000/v3 \
    --os-domain-name mpcdf \
    --os-project-name PROJECT \
    --os-username USER ...